At meta-testing, we apply this algorithm to learn a near-optimal policy.

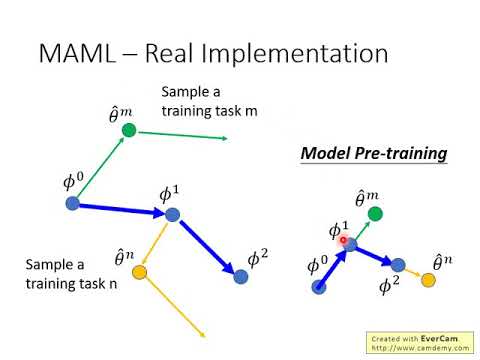

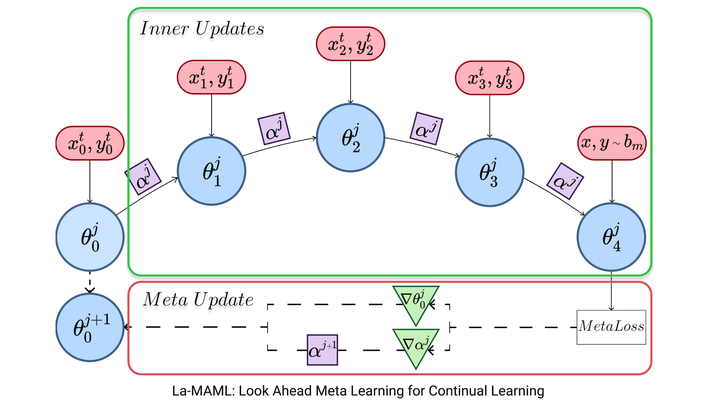

In our introduction to meta-reinforcement learning, we presented the main concepts of meta-RL: Meta-environments are associated with a distribution of distinct MDPs called tasks. The goal of meta-RL is to learn to leverage prior experience to adapt quickly to new tasks.

Luis Müller implemented the visualization of MAML, FOMAML, Reptile and the Comparison. Max Ploner created the visualization of iMAML and the Svelte elements and components. Both wrote the introduction together and contributed most of the text of the other parts. Thomas Goerttler came up with the idea and sketched out the project. He also wrote parts of the manuscript and helped with finalizing the document. Klaus Obermayer provided feedback on the project.

In the next section, we will take a close look at MAML and study the math behind the method, as well as explore some of the concepts interactively.

Siamese Nets consist of two identical networks which produce a latent representation. From the representations of two samples, a distance is calculated to assess the similarity of the two samples. The result (accuracy of 88.1%) results from a reimplementation of the method.

Matching Networks also work by comparing new samples to labeled examples. They do so by utilizing an attention kernel. Though the second version of the paper is cited here, [...]

Prototypical Networks use prototype vectors to represent each class. The nearest neighbor (i.e., the closest prototype) of a sample then determines the prediction.

Memory-Augmented Networks (MANNs) use external memory to make accurate predictions using a small number of samples.

Meta Networks utilize a base learner (task level) and a meta learner as well as a set of slow and rapid weights to allow meta-learning and task-specific concepts.
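The nearest-prototype rule described for Prototypical Networks can be sketched in a few lines. This is a minimal illustration, not the reference implementation: it assumes the embeddings have already been computed by some network, uses the class mean as the prototype and Euclidean distance as the metric, and the names `prototypes`, `classify`, and the toy `support` data are hypothetical.

```python
import math


def prototypes(support):
    """Average the embeddings of each class to get one prototype per class."""
    protos = {}
    for label, vectors in support.items():
        dim = len(vectors[0])
        protos[label] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return protos


def classify(query, protos):
    """Return the label whose prototype is closest (Euclidean) to the query."""
    return min(protos, key=lambda label: math.dist(query, protos[label]))


# Hypothetical 2-D embeddings of a labeled support set (two classes).
support = {
    "a": [[0.0, 0.0], [0.2, 0.0]],
    "b": [[1.0, 1.0], [1.0, 0.8]],
}
protos = prototypes(support)
print(classify([0.1, 0.1], protos))  # a
print(classify([0.9, 0.7], protos))  # b
```

In a real model the distance would be computed on learned embeddings, and the embedding network would be trained so that this rule classifies query samples correctly.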
The generative stroke model was introduced in the paper, which also [...] The model is based on a latent stroke representation (including the number and directions of strokes). Although an interesting approach, it can hardly be generalized to other [...] The same authors improved the model by learning latent primitive motor elements and called this process "Hierarchical [...] While the accuracy was greatly increased, it also [...]

In the following figure, you can find a selection of meta-learning methods [...] learning, their performance on Omniglot, as well as your own accuracy score from the starting page. There is also optimization-based meta-learning, of which [...]

This figure shows the results of different methods on the Omniglot dataset. If not stated differently, you see the results of 20-way 1-shot, but some differences in the evaluation procedure exist. As usual, accuracy numbers need to be taken with a grain of salt as differences in the evaluation method, implementation, and model complexity may have a non-negligible impact on the performance.
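To make the "20-way 1-shot" setting concrete: each evaluation episode contains 20 classes with a single labeled example per class, and the model has to assign held-out query samples to one of those 20 classes. The sketch below shows one way such an episode could be drawn. The function `sample_episode`, its parameters, and the toy dataset are assumptions for illustration, not the evaluation code behind the reported numbers.

```python
import random


def sample_episode(dataset, n_way=20, k_shot=1, n_query=1, rng=random):
    """Draw an N-way K-shot episode: a labeled support set and a query set."""
    classes = rng.sample(sorted(dataset), n_way)
    support, query = [], []
    for label in classes:
        # Pick disjoint support and query examples for this class.
        examples = rng.sample(dataset[label], k_shot + n_query)
        support += [(x, label) for x in examples[:k_shot]]
        query += [(x, label) for x in examples[k_shot:]]
    return support, query


# Hypothetical toy data: 30 classes with 5 placeholder samples each.
toy = {f"char_{c}": [f"char_{c}_img_{i}" for i in range(5)] for c in range(30)}
support, query = sample_episode(toy, rng=random.Random(0))
print(len(support), len(query))  # 20 20
```

Accuracy is then the fraction of query samples classified correctly, averaged over many episodes. Differences in how episodes are drawn are one source of the evaluation differences mentioned above.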

Applications of Meta-Learning outside the domain of few-shot learning include the optimization of the task-level optimizer using [...]

The core idea of metric-based approaches is to compare two samples in a latent (metric) space: In this space, samples of the same class are supposed to be close to each other, while two samples from different classes are supposed to have a large distance (the notion of a distance makes [...]

Model-based approaches are neural architectures that are deliberately designed for fast adaptation to new tasks without an inclination to overfit. Memory-Augmented Neural Networks and MetaNet are two examples. Both employ an external memory while still maintaining the ability to be trained [...]

MAML goes a different route: The neural network is designed the same way your usual model might be (in the many-shot case). [...] optimization, which is what makes it "optimization-based". As a consequence, unlike metric-based and model-based approaches, MAML lets you choose the model architecture freely. This has the great benefit of being applicable not only to conventional supervised learning classification tasks but also [...]
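The optimization-based idea can be illustrated with a deliberately tiny example. This section does not spell out MAML's update rule, so the sketch follows the commonly described form: one gradient step per task (inner loop), followed by an update of the shared initialization that differentiates through that step (outer loop). The one-parameter model, the quadratic task losses, the learning rates, and all function names are assumptions chosen so the gradients can be written by hand.

```python
def task_grad(theta, target):
    """Gradient of the toy task loss 0.5 * (theta - target)**2 w.r.t. theta."""
    return theta - target


def maml_meta_gradient(theta, targets, inner_lr):
    """Average gradient of the post-adaptation loss w.r.t. the initial theta."""
    total = 0.0
    for target in targets:
        # Inner loop: one gradient step on this task, starting from theta.
        adapted = theta - inner_lr * task_grad(theta, target)
        # Chain rule through the inner step: d(adapted)/d(theta) = 1 - inner_lr.
        total += task_grad(adapted, target) * (1.0 - inner_lr)
    return total / len(targets)


targets = [-1.0, 0.5, 2.0]  # hypothetical task optima
theta, inner_lr, outer_lr = 5.0, 0.1, 0.5
for _ in range(200):
    # Outer loop: move the shared initialization along the meta-gradient.
    theta -= outer_lr * maml_meta_gradient(theta, targets, inner_lr)
print(round(theta, 3))  # 0.5
```

Nothing in the procedure depends on the model being a single parameter. Replacing the hand-written gradients with automatic differentiation gives the same two-level loop for an arbitrary network, which is why the architecture can be chosen freely.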
